Posted: Nov 4th 2024

Speed Limiters

Last week a new AI demo went viral - this time, it was Oasis, an interactive demo that can take your keyboard inputs and combine them with a model that has been trained on footage of Minecraft. The result is a weird fuzzy hybrid somewhere between a videogame and a movie: the video/image generator tries to predict the next frame based on what’s currently on screen, but combined with what the player is pressing on the keyboard. If you press forwards, the next frame will be generated as if you had walked forwards a little bit in the game. A lot of people had opinions about this.

Often we get confused about what exactly an AI demo is trying to promote. In the case of Oasis, the generative Minecraft toy, it’s actually promoting the speed at which it can generate frames more than anything else. Etched, one of the companies behind the demo, is manufacturing a specialised chip that has been optimised for running transformer models, a popular architecture for solving modern machine learning tasks. A few years ago specialised AI hardware was talked about a lot as the future, but in the end Nvidia hoovered up a lot of the market and then everyone decided they’d rather pay a subscription to OpenAI than build their own thing. But companies like Etched want their chips to become the focal point of AI engineering, and need impressive demos to show what they’re capable of.

Everyone’s entitled to a bad opinion about a thing - I enjoyed having my bad opinion on this when it came out. You don’t need to read anything I’m about to say, but I wanted to talk a bit about two lines of argument in the AI industry today that surfaced again for me when looking at the response to Oasis. The first is that this demo is technically impressive, and that even if you don’t think it’s very good you’ve got to admit it’s an achievement. The second is that this demo is just the beginning, and that this sort of thing will keep getting better, cheaper and easier to do over time. Both of these lines are very familiar to me, and made me think about how we ended up here over the last decade.

500,000,000,000

A lot of things have happened over the last decade that seemed, at some point, so hard that we would never see AI achieve them. We’re approaching the tenth anniversary of AlphaGo beating Lee Sedol at the game of Go, after which we saw AI tackling games like DOTA 2 and StarCraft, advances in biochemistry and algorithm design, and massive improvements in tasks such as natural language comprehension. Some of these advances have come from new technical innovations. The transformer, for example, was an important change in how certain AI models were structured. These advances have shifted the way (some) AI systems are built, and made important contributions to the field.

Some of the advances we’ve seen, however, have come through massive investment. Some estimates I’ve seen suggest that over the past eight years there has been more than half a trillion dollars of private investment into AI around the world. This has two important effects. First, some results were only really obtainable at the time by throwing huge amounts of resources at them. The DOTA 2 and StarCraft results - both hugely flawed in their own ways but also massive headline-making achievements - stand out as examples of this, where the results were obtained through eye-watering investments of time and resources into a problem. People defending these results said the same thing people were saying this week about Oasis: it’s okay to spend a lot to obtain these results now, because over time they’ll become cheaper. We’ll come back to that point later.

The second important effect investment has is that once you spend money somewhere, you’re not spending it somewhere else. When we look at the results we’re getting from AI companies today, we’re not just assessing them in isolation. We’re comparing them against other things that might have happened were that money invested elsewhere. What could you do with half a trillion dollars? Maybe you’d invest it in climate change research? Cold fusion and space travel? A really nice water park? Is what we're getting from this dedication of time and resources actually worth it?

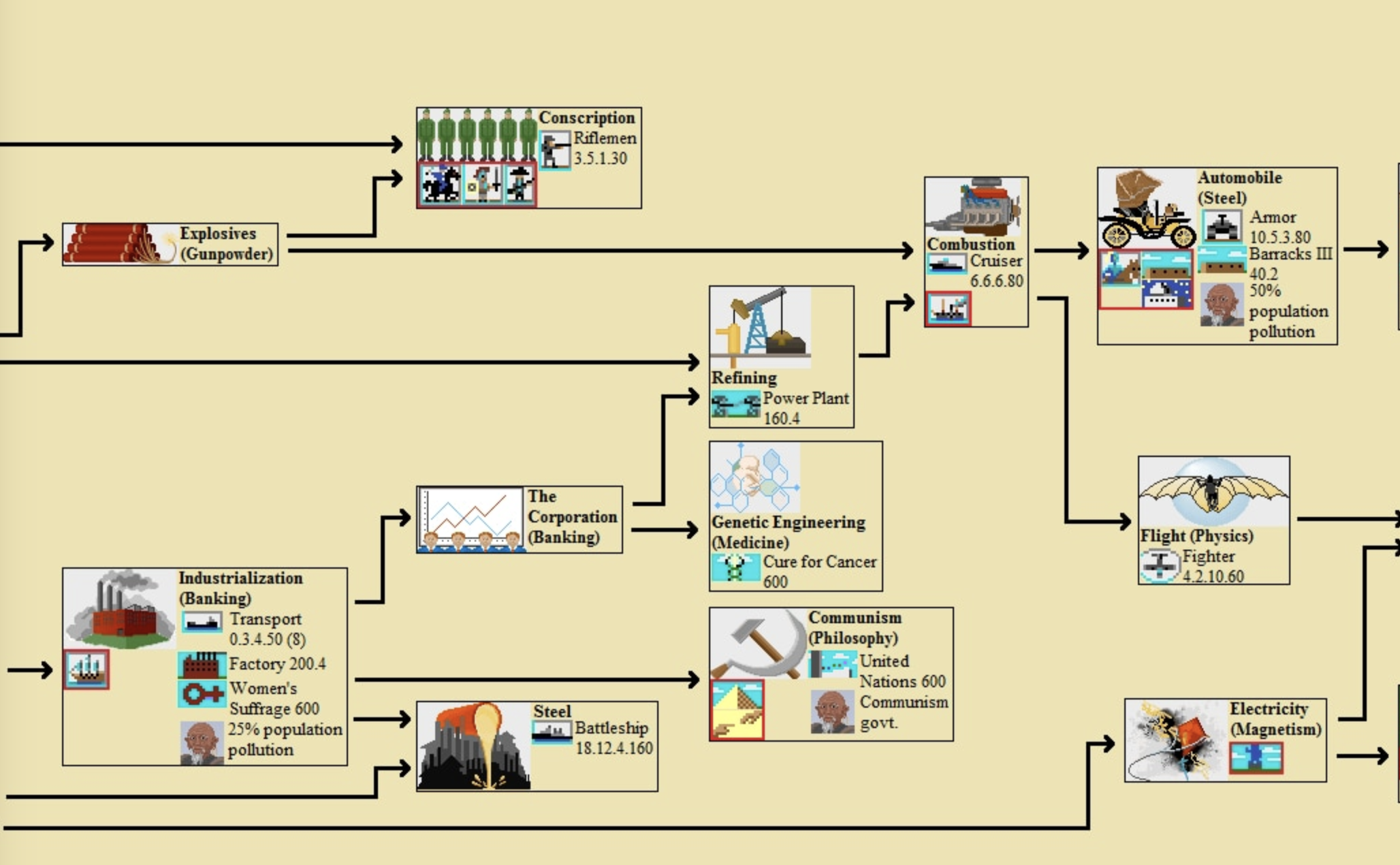

For me, it’s not even a case of spending the money outside of AI - what I think about is what all the other subfields within AI might have waiting to be discovered in them. There are a lot of ways to solve a problem with AI. One way to beat a human at Go would be to simply memorise every possible board state in Go. We don’t try to do that for a number of reasons, one of which is that there are more possible board states than there are atoms in the universe. But there are plenty of more plausible techniques that, if you pumped enough money into them, would eventually yield a win against the best humans. How much money is enough money? What breakthroughs might we have made in areas like computational evolution or genetic programming if we had decided to make them the centre of the boom instead of neural networks? Half a trillion dollars buys a lot of monkeys and a lot of typewriters.

We talk about scientific progress as if it is a linear path of results, each one better than the last. As a result of this mental model, anyone who gets a result further down the path is doing something good, because they’re getting us closer to our end goal of The Future. There’s no sense of whether it was a good or a bad path to take, because we don’t have a sense, as a society, that alternate paths exist. In the media, pop science and the news, the history of science is discussed and understood as a series of discoveries that were uniformly better or truer than the things that preceded them. The idea that we might have wasted a decade of scientific funding trying to fit a square peg in a round hole is simply not entertained.

Is Oasis technically impressive? It depends who you ask. I think its description as a ‘game engine’ or an ‘AI-generated game’ is beyond misleading; it’s technically nonsensical. However technically impressive you find it, though, the question we don’t often ask about systems like this is ‘is it worth it?’ Not just in the sense of what this particular system cost to make, but in terms of the investment - of time, money and attention - that led up to it being possible.

Airspeed

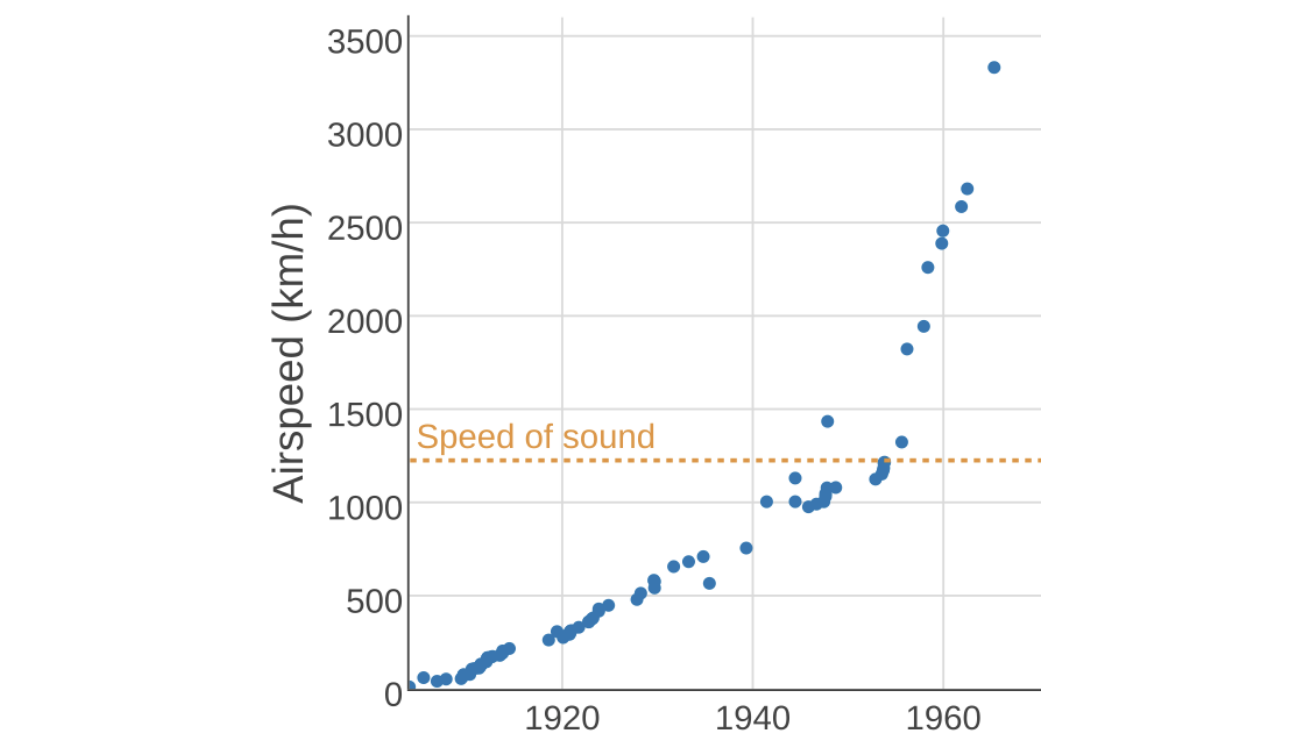

One of the people replying to me in defence of Oasis, for some reason, suggested that I would have complained about the Wright brothers’ first flight only going 200m. Of course, now planes can fly a lot further than that. They’re also a lot faster than the Wright brothers’ first attempt at getting off the ground. There’s a long history of trying to beat the current speed record for how fast a plane (or a car, or a train) can go. Here’s a graph of all those records for airspeed, up to around 1960 or so (thanks Wikipedia!)

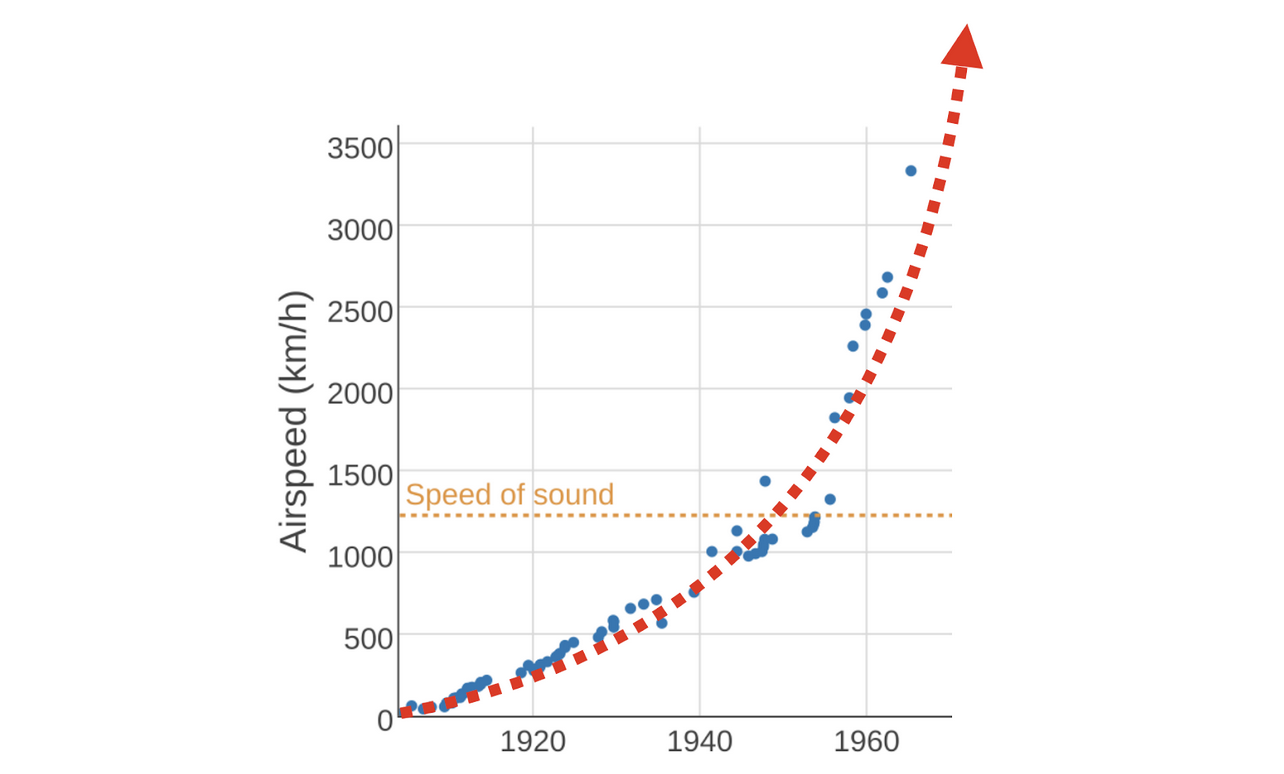

You can see the impact that the invention of the jet engine had on how fast planes could travel - it’s quite remarkable. In fact, it almost fits an exponential curve, which is really exciting. Look, I've sketched a convincing one over the data here:

What’s really exciting about this is that if you follow the trendline you can see that around the year 1980 we exceeded the speed of light, which is why in 2024 we are well into colonising the galactic core on a fleet of ark ships and preparing to expand beyond the stars. If, for some reason, you are not reading this from a space station orbiting Alpha Centauri, then I guess this trend didn’t continue forever, which is a huge bummer.

Trends make sense! We rely on patterns to get us through our day and our lives. We also see technology progress a lot, and just like our stories about scientific discovery and eureka moments, we also share this idea that technology is always improving and strictly getting better at all times, and will do so forever. If someone has found a technique to make something possible, its cost is irrelevant: we will simply do more of it until it gets cheaper, better and faster. The Internet is faster than it was two decades ago, digital cameras are higher-resolution, 3D graphics are more detailed. Why wouldn’t AI work the same way?

In reality there are a lot of reasons why trends stall out. Sometimes an approach hits a ceiling it can’t get past without an advance happening somewhere else. Sometimes what looked like a continuous improvement was actually just a sharp, short-term gain that didn’t scale. Sometimes it just becomes too costly to keep investing: think of all the technologies that we know are beneficial to our society but that are too expensive for governments to want to invest in implementing. Even companies drowning in investment like OpenAI, or sitting on tech profits like Google, still have limits on what they are willing to invest, what they are willing to risk and what they are willing to lose.

There are also theoretical and practical limits to contend with. Oasis was trained by watching a lot of Minecraft footage and predicting how the next frame would look based on the current one. How much more efficient could we make this process? Could Oasis learn a model of this game from a single screenshot? Of course not. From ten screenshots? If not, how many would it need to see? How much footage? Oasis’ limitations are waved off as simply a problem of polish and investment, but the fact is that every missing feature or quirk requires some kind of investment to fix - time, money, cycles, data - and that cost may not scale as neatly as we hope. There is a minimum amount of information we need to recreate a game like Minecraft. For example, in the Swamp biome the slime mob only spawns between a world height of 51 and 69, and its chance of spawning is affected by the in-game phase of the moon. How much footage would the model need to capture this with no other information? And is it actually useful to build a system that can do this?

Machine learning models can seem magic, and they can lead us to think that anything is possible if we put enough into them. But there are limits. The airspeed record capped out because people lost interest in going faster and it had fewer practical uses. But there are theoretical limits too - the Earth’s atmosphere imposes increasingly harsh barriers the faster you go, long before you reach hypothetical problems like the speed of light. For AI, there are entire fields of study looking at statistical confidence levels, information theory, algorithmic complexity and model inference. We can make processes more efficient, yes, and with enough money we can make incredible things happen. But there is not always a way to make things faster, cheaper or more widely available. Not everything scales forever.

Mirages

It’s fine to look at Oasis and think it’s cool. I get it, and look, we’re deep enough into the AI wave at this point that a lot of younger people don’t remember what things looked like before. We’re so far gone with the current AI boom that these kinds of things don’t matter any more. I’m not trying to change anyone’s mind or tell them they’re bad for enjoying this stuff. There was a point where that kind of thing mattered, but we’re past it now and the only way the bad stuff in the world of AI is going to stop is with an industry-wide crash. So if you like Oasis and you think I’m wrong and you want to let me know, please feel free to write a tweet in your text editor, print it out, leave it on your windowsill and let the wind carry it to me. Or just don’t write it at all. It’s okay, I promise.

I wrote this to try and articulate why the common things I see said about any AI result - “you have to admit it’s impressive” or “it’ll get cheaper in the future” - aren’t always obviously true. They might be in some cases. But assuming they hold true for all technological products is why we are in the state we’re in now, and why there is such a dearth of critical thought in response to AI. Technological progress is a branching network of paths that split and rejoin at different points, and right now we are cheering on a small group who are making all the choices for us. Sometimes you’ll agree with them. Sometimes they’re making the best choice possible. But there’s no need to assume they are correct just because you’re being told they are.

Thanks to K for reading over a draft of this and giving some feedback.
